Talk@UWaterloo - Steering Towards Safe Human-AI Interactions

Changliu gave a talk at the University of Waterloo titled "Steering Towards Safe Human-AI Interactions."

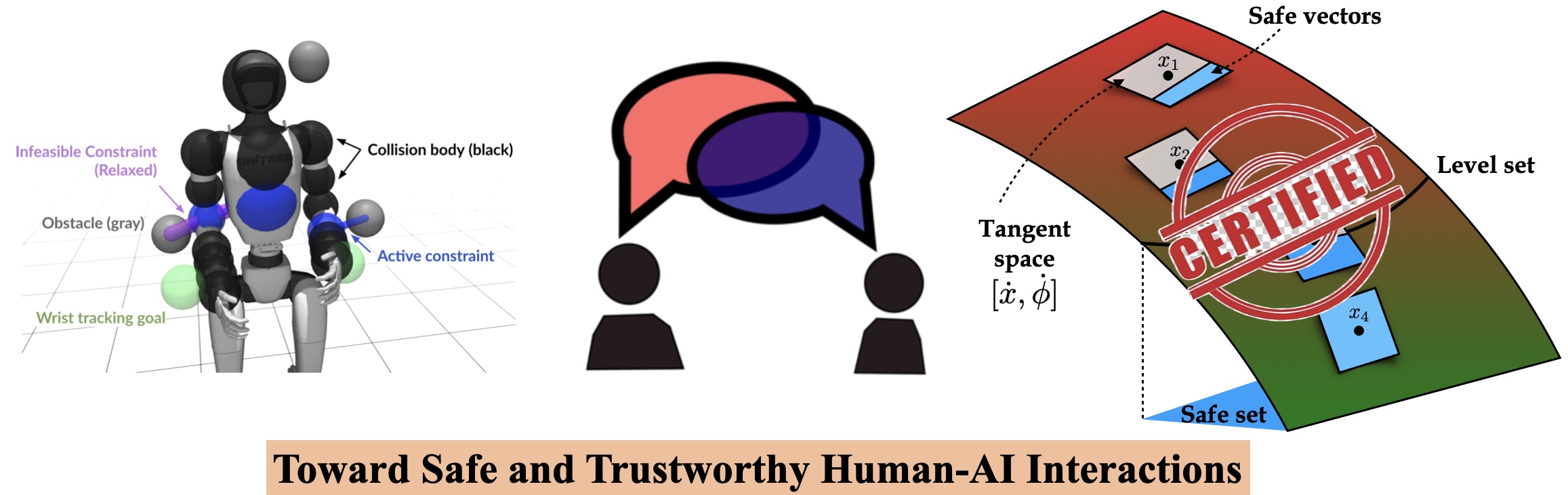

Abstract: As generative AI and robotics increasingly integrate into daily life, ensuring their safe interaction with humans remains a critical challenge. Safety concerns extend beyond physical interactions (such as preventing collisions) to conversational safety, where AI must avoid exchanging harmful or dangerous information. In this talk, I will discuss how we frame these challenges as constraint satisfaction problems and address them using forward invariance principles from control theory. The first line of work I will discuss focuses on Physical Safety. I will introduce SPARK, a comprehensive toolbox and benchmark designed to ensure safety in humanoid autonomy and teleoperation. The second line of work addresses Conversational Safety. Large language models (LLMs) are highly vulnerable to multi-turn jailbreaking attacks, where contextual drift gradually leads them away from safe behavior. To mitigate this, we propose a safety steering framework grounded in control theory to maintain invariant safety in multi-turn dialogues. Lastly, as many safety certificates are learned via neural networks, a critical question arises: how can we certify Neural Safety Certificates? I will discuss formal verification methods designed to provide guarantees on their reliability. By applying control-theoretic safety principles across diverse domains, from physical robot safety to conversational AI, we aim to build AI systems that interact with humans in ways that are both safe and trustworthy.
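For readers less familiar with forward invariance, the general idea can be illustrated with a minimal, generic sketch of a discrete-time barrier-function safety filter. This is a textbook-style illustration under simplifying assumptions, not the SPARK implementation or the steering method from the talk: the toy 1D system `x_{k+1} = x_k + u_k * DT`, the barrier `h(x) = X_MAX - x`, and the constants `DT`, `X_MAX`, and `GAMMA` are all hypothetical. Safety is posed as the constraint `h(x_{k+1}) >= (1 - GAMMA) * h(x_k)`, and the filter picks the control closest to a nominal command that satisfies it, so a state that starts in the safe set never leaves it.

```python
"""Minimal discrete-time barrier-function safety filter (illustrative sketch).

Hypothetical toy system (not from the talk): x_{k+1} = x_k + u_k * DT,
with safe set {x : h(x) >= 0}, where h(x) = X_MAX - x.
"""

DT = 0.1      # hypothetical time step
X_MAX = 1.0   # hypothetical safety boundary
GAMMA = 0.5   # hypothetical decay rate, must lie in (0, 1]


def h(x: float) -> float:
    """Barrier function: nonnegative exactly on the safe set."""
    return X_MAX - x


def safe_filter(x: float, u_nominal: float) -> float:
    """Return the control closest to u_nominal that satisfies
    h(x_next) >= (1 - GAMMA) * h(x).

    For this system the constraint reduces to u <= GAMMA * h(x) / DT,
    so the minimal-change projection is a clip from above.
    """
    u_max = GAMMA * h(x) / DT
    return min(u_nominal, u_max)


def simulate(x0: float, u_nominal: float, steps: int) -> list[float]:
    """Roll out the filtered system and return the state trajectory."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        u = safe_filter(x, u_nominal)
        x = x + u * DT
        xs.append(x)
    return xs


if __name__ == "__main__":
    # A nominal controller that pushes hard toward (and past) the boundary.
    trajectory = simulate(x0=0.0, u_nominal=5.0, steps=50)
    # Forward invariance: starting safe, every visited state stays safe.
    assert all(h(x) >= 0 for x in trajectory)
    print(f"final state: {trajectory[-1]:.4f} (limit {X_MAX})")
```

Because `h(x_{k+1}) >= (1 - GAMMA) * h(x_k)` and `1 - GAMMA >= 0`, nonnegativity of `h` propagates from one step to the next by induction, which is the forward-invariance property in its simplest form. Real systems such as humanoids require solving a constrained optimization (often a quadratic program) instead of a single clip.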

This talk highlights the following work from ICL:

- [C101] SPARK: Safe Protective and Assistive Robot Kit

  Yifan Sun, Rui Chen, Kai S Yun, Yikuan Fang, Sebin Jung, Feihan Li, Bowei Li, Weiye Zhao and Changliu Liu

  IFAC Symposium on Robotics, 2025

- [U] Dexterous Safe Control for Humanoids in Cluttered Environments via Projected Safe Set Algorithm

  Rui Chen, Yifan Sun and Changliu Liu

  arXiv preprint arXiv:2502.02858, 2025

- [C81] Verification of Neural Control Barrier Functions with Symbolic Derivative Bounds Propagation

  Hanjiang Hu, Yujie Yang, Tianhao Wei and Changliu Liu

  Conference on Robot Learning, 2024