Enhancing and Verifying the Robustness of Learning-based Systems

Overview:

Deep Neural Networks (DNNs) play a crucial role in many autonomous systems, particularly in safety-critical applications like autonomous driving. Consequently, it is essential for these learning-based systems to be reliable when making decisions or supporting human decision-making. In this project, we focus on robustness as a key property—ensuring that the outputs of neural networks or learning-based systems remain stable when their inputs are subject to perturbations. Specifically, we aim to address the following research objectives:

- Creating a comprehensive, user-friendly toolbox that incorporates state-of-the-art methods for verifying various types of DNNs and their associated safety specifications.
- Enhancing the robustness of DNNs during training so that they are easier to verify.
- Certifying the robustness of learning-based systems as a whole, beyond the neural network itself.

Research Topics

Improve Certified Training to Enhance Certified Robustness

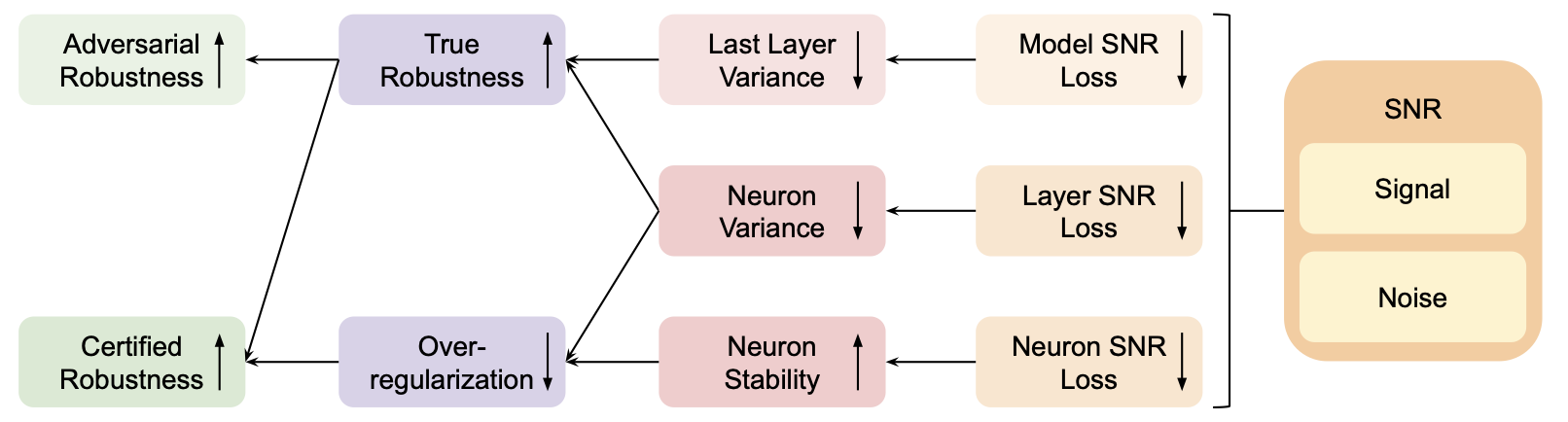

Neural network robustness is a major concern in safety-critical applications. Certified robustness provides a reliable lower bound on worst-case robustness, and certified training methods have been developed to enhance it. However, certified training methods often suffer from over-regularization, leading to lower certified robustness. This work addresses this issue by introducing the concepts of neuron variance and neuron stability, examining their impact on over-regularization and model robustness. To tackle the problem, we extend the Signal-to-Noise Ratio (SNR) into the realm of model robustness, offering a novel perspective and developing SNR-inspired losses aimed at optimizing neuron variance and stability to mitigate over-regularization.
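To make "certified lower bound" and "neuron stability" concrete, the sketch below runs interval bound propagation (IBP), a bounding technique commonly used in certified training, on a toy ReLU network. It is an illustration only, not the SNR-inspired loss from this work. The function names, the toy weights, and the reading of an "unstable" neuron as one whose pre-activation interval contains zero are assumptions made for this example.

```python
def affine_bounds(W, b, lower, upper):
    """Interval bounds of W @ x + b for every x in the box [lower, upper]."""
    new_lower, new_upper = [], []
    for row, bias in zip(W, b):
        lo = hi = bias
        for w, l, u in zip(row, lower, upper):
            # A non-negative weight keeps the interval orientation; a negative one flips it.
            lo += w * l if w >= 0 else w * u
            hi += w * u if w >= 0 else w * l
        new_lower.append(lo)
        new_upper.append(hi)
    return new_lower, new_upper


def interval_bounds(weights, biases, x, eps):
    """Propagate the L-infinity ball of radius eps around x through an affine/ReLU network."""
    lower = [v - eps for v in x]
    upper = [v + eps for v in x]
    hidden_bounds = []  # pre-activation bounds of every hidden layer
    last = len(weights) - 1
    for i, (W, b) in enumerate(zip(weights, biases)):
        lower, upper = affine_bounds(W, b, lower, upper)
        if i < last:  # hidden layer: record the bounds, then apply ReLU to both ends
            hidden_bounds.append((lower, upper))
            lower = [max(v, 0.0) for v in lower]
            upper = [max(v, 0.0) for v in upper]
    return lower, upper, hidden_bounds


def unstable_fraction(hidden_bounds):
    """Share of hidden neurons whose pre-activation interval strictly contains zero."""
    crossing = total = 0
    for lower, upper in hidden_bounds:
        for l, u in zip(lower, upper):
            total += 1
            crossing += l < 0.0 < u
    return crossing / total


def is_certified(lower, upper, label):
    """Sound but conservative check: the true logit's lower bound beats every rival's upper bound."""
    worst_rival = max(u for j, u in enumerate(upper) if j != label)
    return lower[label] - worst_rival > 0.0


# Hypothetical 2-2-2 network: one hidden ReLU layer followed by an identity output layer.
weights = [[[1.0, -1.0], [1.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]]
biases = [[0.0, 0.0], [0.0, 0.0]]
x, label = [2.0, 0.5], 1

for eps in (0.1, 1.0):
    lo, hi, hidden = interval_bounds(weights, biases, x, eps)
    print(eps, unstable_fraction(hidden), is_certified(lo, hi, label))
```

In this toy case the larger perturbation radius makes one hidden neuron unstable and the certificate is lost, which illustrates why the looseness of the bounds and the number of unstable neurons matter for certified robustness.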

Contributors: Tianhao Wei, Ziwei Wang, Peizhi Niu, Abulikemu Abuduweili, Weiye Zhao, Casidhe Hutchison, Eric Sample.

Publications:

- [J26] Improve Certified Training with Signal-to-Noise Ratio Loss to Decrease Neuron Variance and Increase Neuron Stability

Tianhao Wei, Ziwei Wang, Peizhi Niu, Abulikemu Abuduweili, Weiye Zhao, Casidhe Hutchison, Eric Sample and Changliu Liu

Transactions on Machine Learning Research, 2024

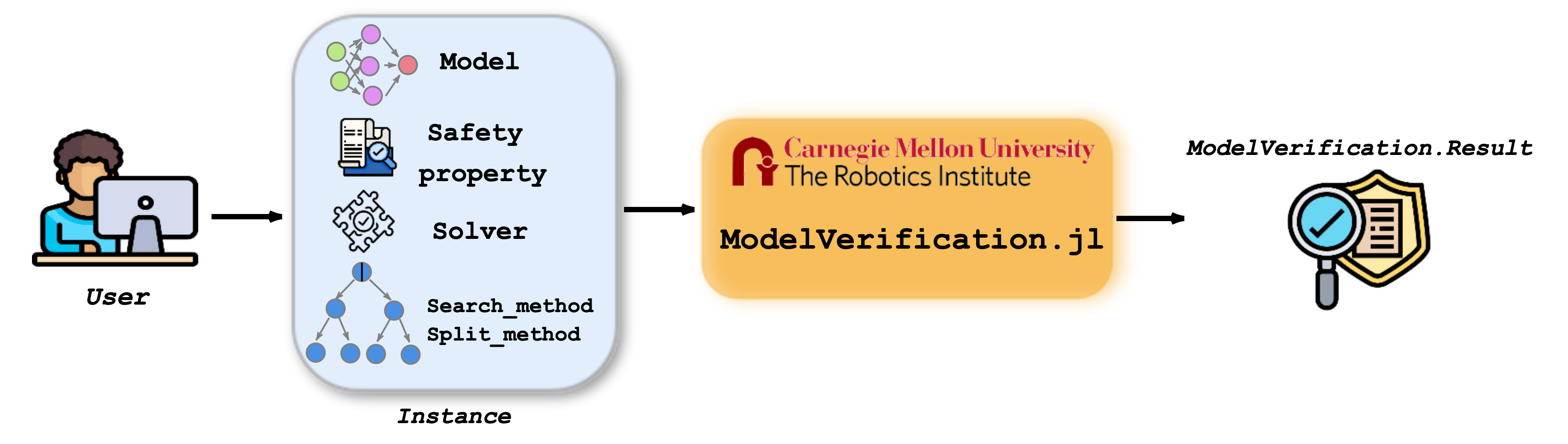

Develop Toolbox for Formally Verifying DNNs

Deep Neural Networks (DNNs) are crucial for approximating nonlinear functions across diverse applications, ranging from image classification to control. Verifying specific input-output properties of a DNN is challenging, in part because no single, self-contained framework supports the full range of verification types. To this end, we present ModelVerification.jl (MV), the first comprehensive, cutting-edge toolbox that contains a suite of state-of-the-art methods for verifying different types of DNNs and safety specifications. This versatile toolbox is designed to give developers and machine learning practitioners robust tools for verifying and ensuring the trustworthiness of their DNN models.
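As an illustration of what an input-output verification query looks like, here is a minimal, generic sketch in Python. It does not use or mirror the ModelVerification.jl API. It checks a linear output constraint `c . f(x) <= d` over an input box by combining interval bounds with input bisection (a simple branch and bound). All names (`forward`, `output_bounds`, `verify`, `abs_net`) and the three-valued result are assumptions made for this example, and floating-point rounding is ignored.

```python
from collections import deque


def forward(layers, x):
    """Evaluate an affine/ReLU network given as a list of (W, b) pairs."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(v, 0.0) for v in x]
    return x


def output_bounds(layers, lower, upper):
    """Interval bounds on the network output over the input box [lower, upper]."""
    for i, (W, b) in enumerate(layers):
        new_lower, new_upper = [], []
        for row, bias in zip(W, b):
            new_lower.append(bias + sum(w * (l if w >= 0 else u) for w, l, u in zip(row, lower, upper)))
            new_upper.append(bias + sum(w * (u if w >= 0 else l) for w, l, u in zip(row, lower, upper)))
        lower, upper = new_lower, new_upper
        if i < len(layers) - 1:
            lower = [max(v, 0.0) for v in lower]
            upper = [max(v, 0.0) for v in upper]
    return lower, upper


def verify(layers, lower, upper, c, d, max_boxes=1000):
    """Return 'holds', 'violated', or 'unknown' for the property c . f(x) <= d on the box."""
    queue = deque([(list(lower), list(upper))])
    processed = 0
    while queue:
        if processed >= max_boxes:
            return "unknown"  # budget exhausted before every sub-box was resolved
        lo, hi = queue.popleft()
        processed += 1
        center = [(l + u) / 2.0 for l, u in zip(lo, hi)]
        # A concrete input that breaks the constraint is a genuine counterexample.
        if sum(ci * yi for ci, yi in zip(c, forward(layers, center))) > d:
            return "violated"
        out_lo, out_hi = output_bounds(layers, lo, hi)
        worst = sum(ci * (yh if ci >= 0 else yl) for ci, yl, yh in zip(c, out_lo, out_hi))
        if worst <= d:
            continue  # the over-approximation already proves the property on this sub-box
        # Otherwise bisect the widest input dimension and examine both halves.
        k = max(range(len(lo)), key=lambda j: hi[j] - lo[j])
        left_hi = list(hi)
        left_hi[k] = center[k]
        right_lo = list(lo)
        right_lo[k] = center[k]
        queue.append((lo, left_hi))
        queue.append((right_lo, hi))
    return "holds"


# Toy network computing |x| as relu(x) + relu(-x); purely illustrative.
abs_net = [([[1.0], [-1.0]], [0.0, 0.0]), ([[1.0, 1.0]], [0.0])]
print(verify(abs_net, [-1.0], [1.0], c=[1.0], d=1.5))
print(verify(abs_net, [-1.0], [1.0], c=[1.0], d=0.5))
```

The interval bound on the whole box is too loose to prove the first query directly, so the sketch only succeeds after splitting the input. This is the basic reason verification tools pair a bound-propagation step with a search-and-split strategy.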

Contributors: Tianhao Wei, Luca Marzari, Kai S. Yun, Hanjiang Hu, Peizhi Niu, Xusheng Luo

Publications:

- [C100] ModelVerification.jl: A Comprehensive Toolbox for Formally Verifying Deep Neural Networks

Tianhao Wei, Hanjiang Hu, Luca Marzari, Kai S Yun, Peizhi Niu, Xusheng Luo and Changliu Liu

Conference on Computer Aided Verification, 2025

Certifying Robustness of Learning-Based Pose Estimation Methods

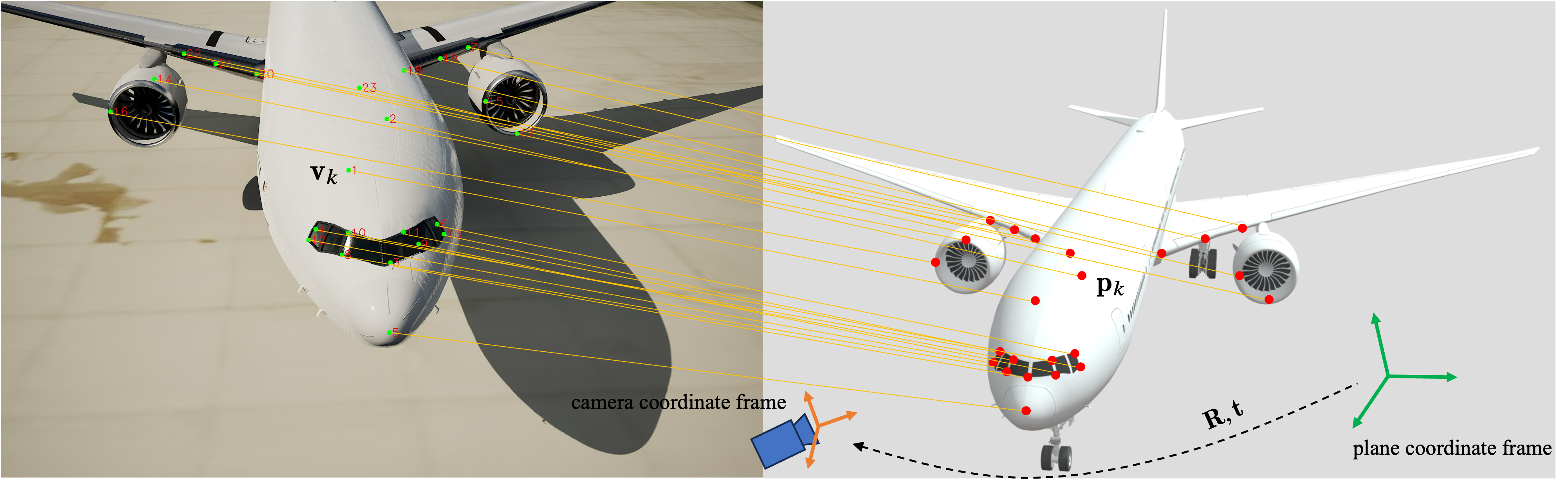

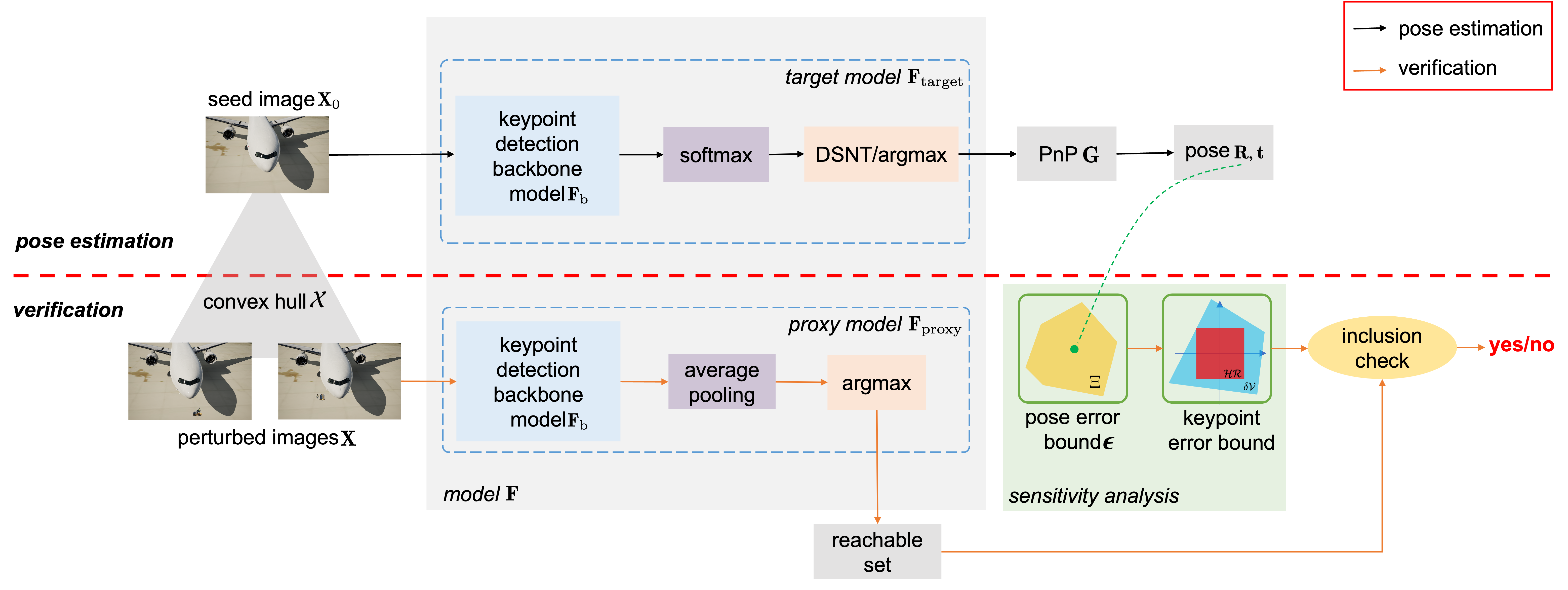

In computer vision, vision-based 6D object pose estimation, i.e., estimating the 3D rotation and 3D translation of an object with respect to the camera, is a pivotal method for identifying, monitoring, and interpreting the posture and movements of objects through images. Despite increasing efforts to boost the empirical robustness of these methods against challenges such as occlusions, fluctuating lighting, and varied backgrounds, little work has focused on validating or certifying the reliability of vision-based pose estimation systems. The absence of performance guarantees for these frameworks raises concerns about their integration into safety-critical applications. In this work, our objective is to certify the robustness of learning-based keypoint detection and pose estimation approaches given input images. We focus on local robustness, which refers to the ability to maintain consistent performance or predictions when the input data is perturbed around a given input point. The core question is whether the estimated pose stays within an acceptable range when the input image undergoes perturbations. To the best of our knowledge, this study is the first to certify the robustness of large-scale, keypoint-based pose estimation problems encountered in the real world.
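One way to write the local robustness question formally is sketched below. The notation is an assumption introduced here for illustration: $x_0$ is the nominal input image, $f$ maps an image to an estimated pose, $\epsilon$ is the perturbation budget, $d$ is some distance between poses, and $\delta$ is the acceptable deviation. The $\ell_\infty$ ball is only one possible perturbation set; the work itself may use a different one.

```latex
\forall x' :\quad \|x' - x_0\|_\infty \le \epsilon
\;\Longrightarrow\;
d\big(f(x'),\, f(x_0)\big) \le \delta
```

Certification means proving this implication for every admissible $x'$, in contrast to empirical robustness testing, which only checks finitely many sampled perturbations.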

Contributors: Xusheng Luo, Tianhao Wei, Simin Liu, Ziwei Wang, Luis Mattei-Mendez, Taylor Loper, Joshua Neighbor, Casidhe Hutchison.

Publications:

- [J30] Certifying Robustness of Learning-Based Keypoint Detection and Pose Estimation Methods

Xusheng Luo, Tianhao Wei, Simin Liu, Ziwei Wang, Luis Mattei-Mendez, Taylor Loper, Joshua Neighbor, Casidhe Hutchison and Changliu Liu

ACM Transactions on Cyber-Physical Systems, 2025

Sponsor: The Boeing Company

Period of Performance: 2023 ~ 2024

Point of Contact: Xusheng Luo, Tianhao Wei